Gigabyte G32QC Review


Author: Adam Simmons Date published: October 16th 2020

Introduction

For those wanting an immersive experience or a monitor they can sit a little way back from if desired, the ~32” screen size can be a good fit. The Gigabyte G32QC offers this, coupling it with a 2560 x 1440 (WQHD) resolution for comfortable pixel density and a high refresh rate VA panel. Additional features of note include a moderate curve to the screen, support for Adaptive-Sync (including AMD FreeSync Premium Pro) and VESA DisplayHDR 400 certification. We put this model through its paces in our usual testing gauntlet.

Specifications

The monitor is based on a 31.5” Samsung SVA (‘Super’ Vertical Alignment) panel with 1500R curve. More specifically, a Samsung CELL with custom backlighting solution. The monitor supports true 8-bit colour, a 165Hz refresh rate and 2560 x 1440 (WQHD) resolution. A 1ms MPRT (Moving Picture Response Time) is specified using the included strobe backlight setting. As usual, don’t pay too much attention to specified response times – we provide a far more realistic assessment of such things in the review. Some of the key ‘talking points’ for this monitor have been highlighted in blue below, for your reading convenience.

Screen size: 31.5 inches

Panel: Samsung SVA (‘Super’ Vertical Alignment) LCD*

Native resolution: 2560 x 1440

Typical maximum brightness: 350 cd/m² (400 cd/m²+ HDR)

Colour support: 16.7 million (8-bits per subpixel without dithering)**

Response time (MPRT): 1ms

Refresh rate: 165Hz (variable, with Adaptive-Sync)

Weight: 7.5kg

Contrast ratio: 3000:1

Viewing angle: 178º horizontal, 178º vertical

Power consumption: 75W (max)

Backlight: WLED (White Light Emitting Diode)

Typical price as reviewed: £350 ($340 USD)

*Following Samsung’s suspension of LCD production, the panel has been replaced by an alternative VA panel produced by CSOT or AUO. Similar image characteristics are expected, with the updated model designated G32QC A.

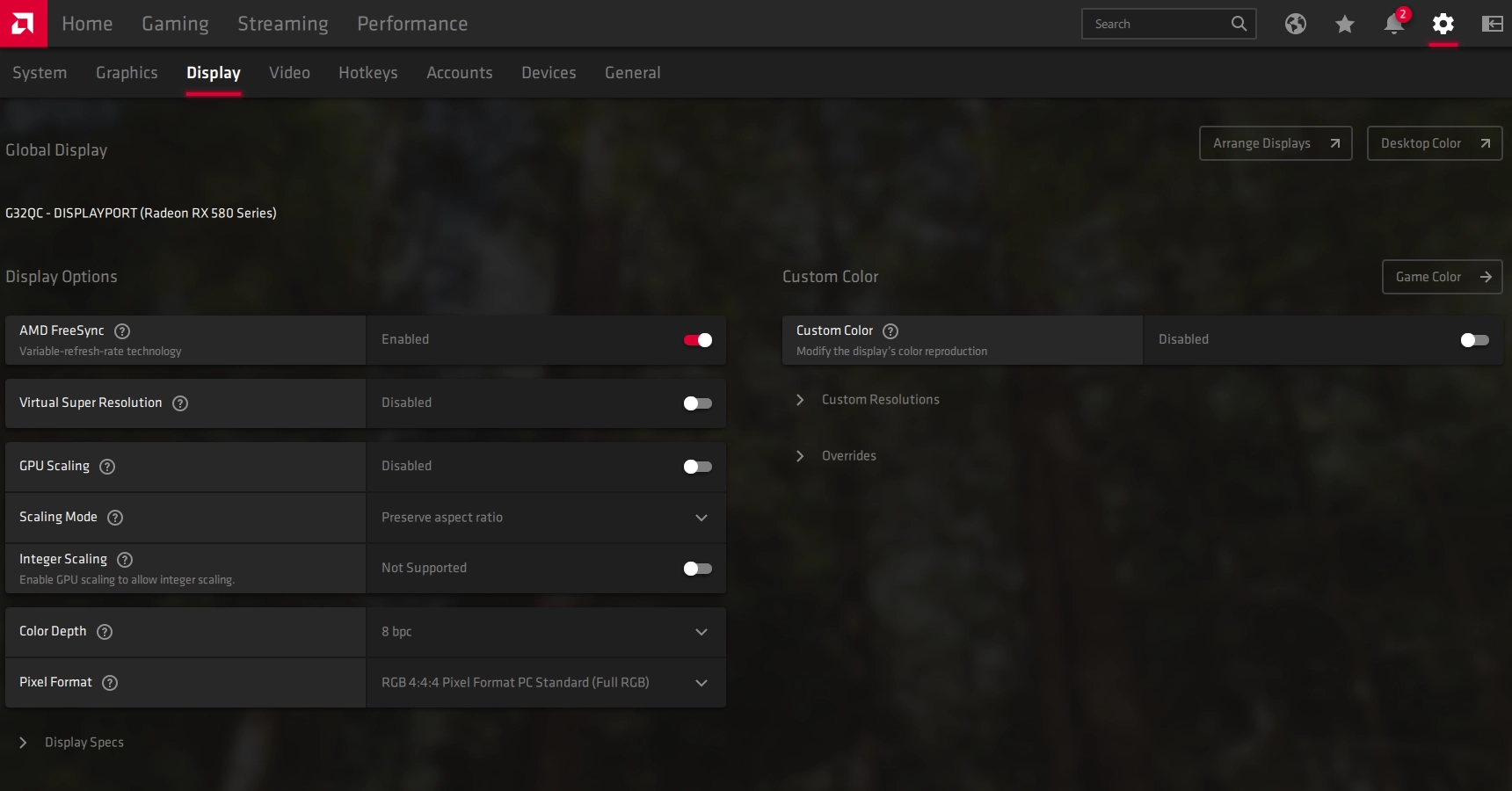

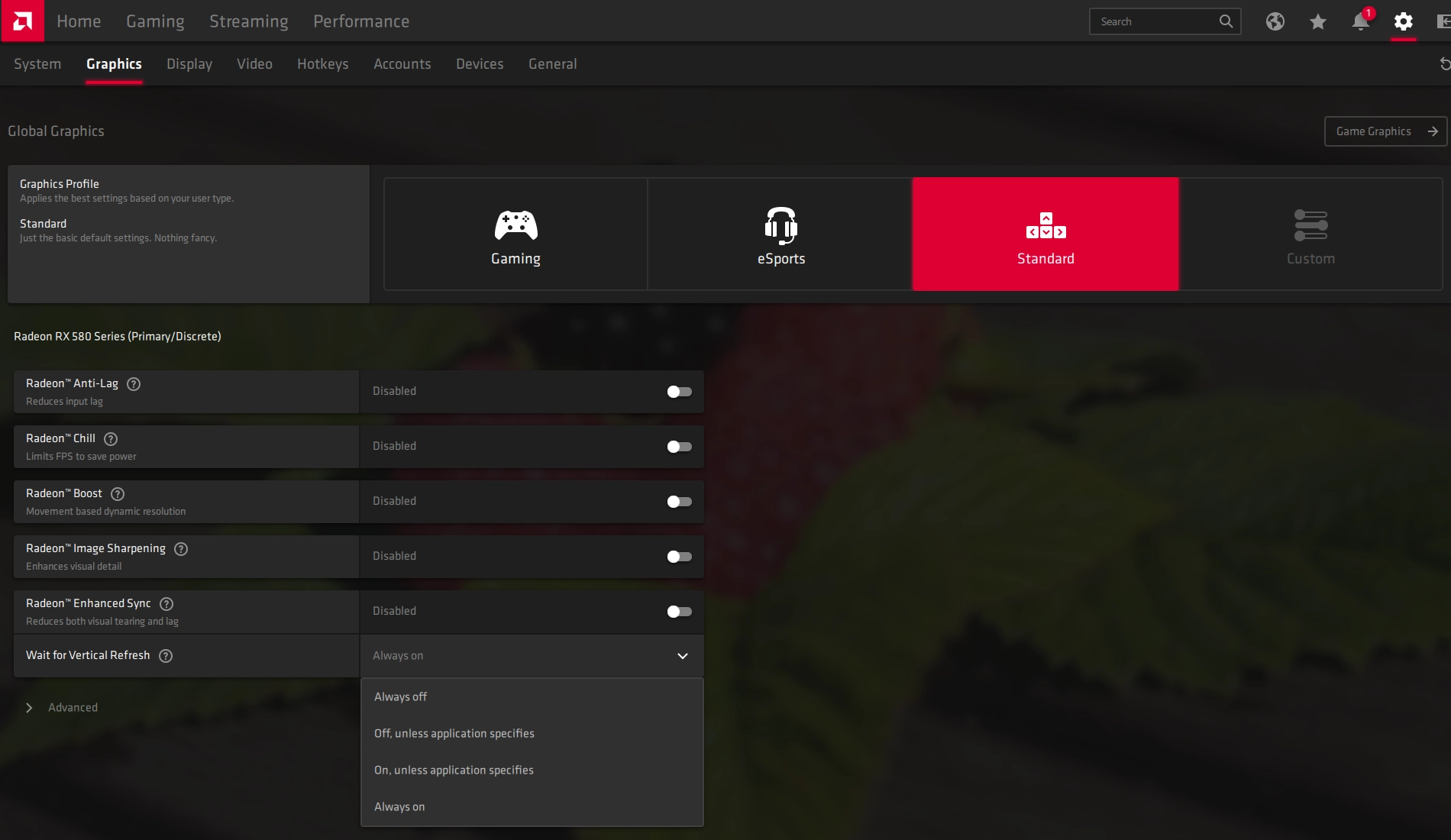

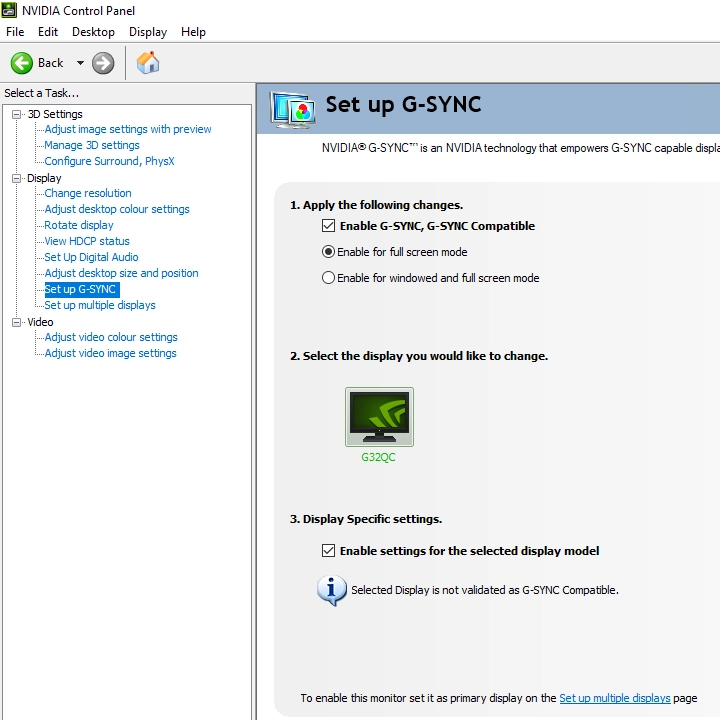

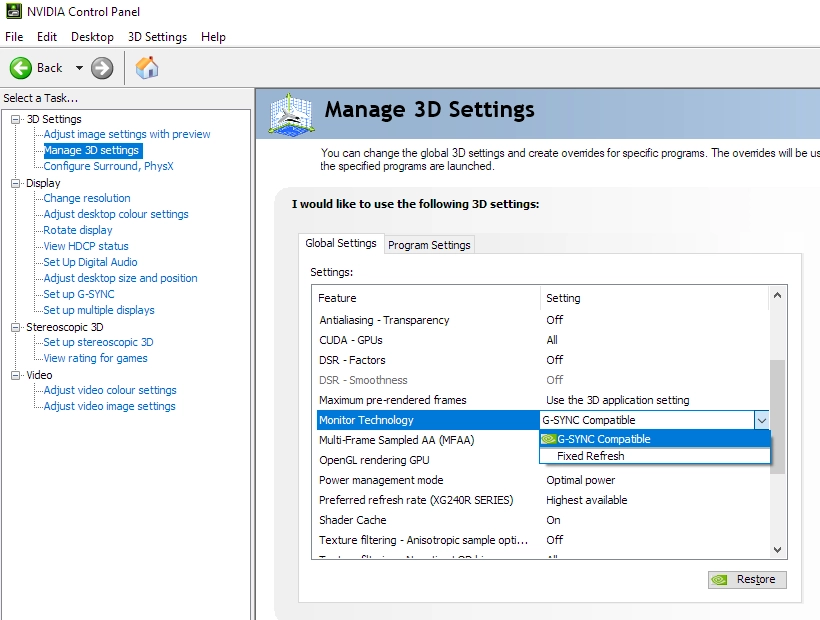

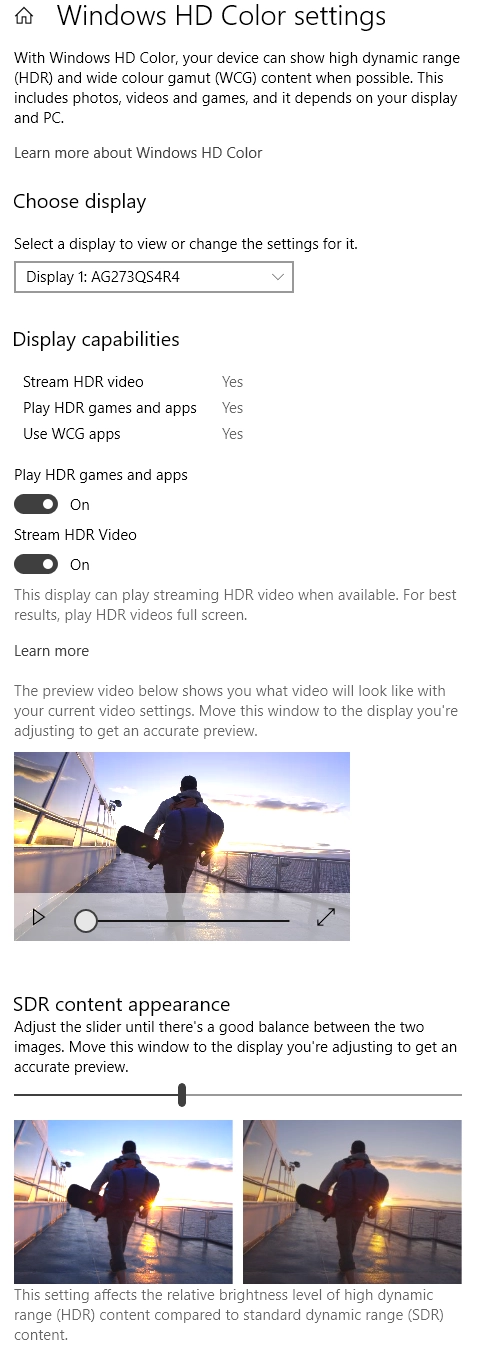

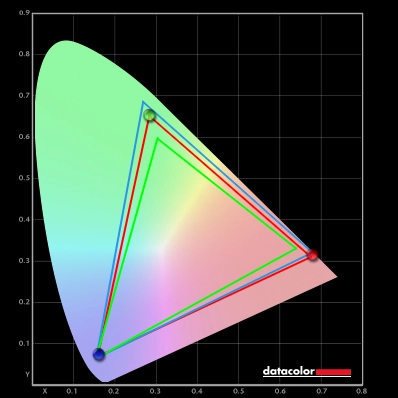

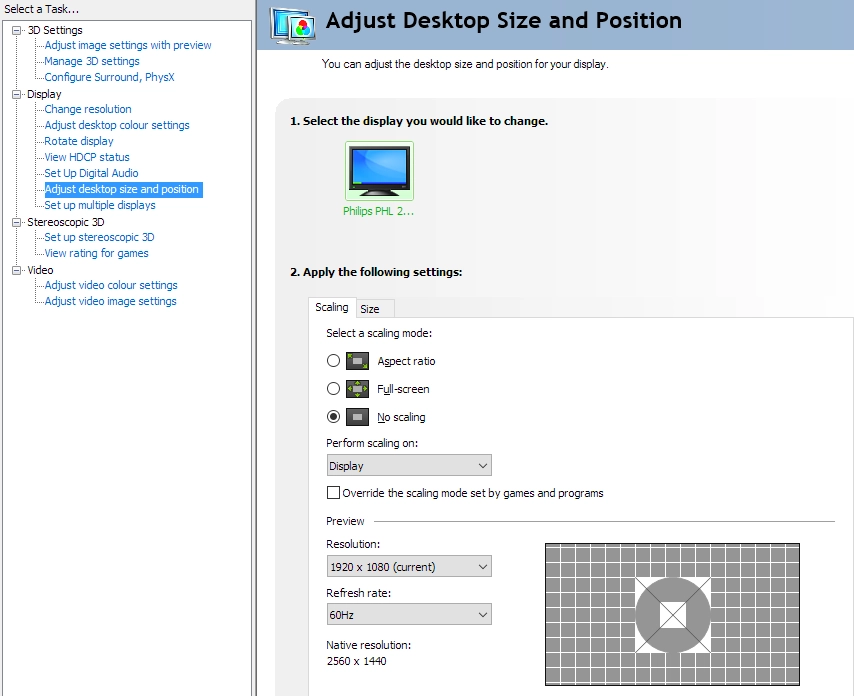

**10-bit can be selected in the graphics driver at 120Hz or below when using DP and running at the native resolution. 12-bit can be selected at 60Hz when using HDMI at the native resolution. The panel used is only an 8-bit panel, but the monitor’s scaler can add a dithering stage to facilitate work with higher bit depth content.

As an Amazon Associate I earn from qualifying purchases made using the below link. Where possible, you’ll be redirected to your nearest store. Further information on supporting our work.

Buy from Amazon

AMD FreeSync is a variable refresh rate technology, an AMD-specific alternative to Nvidia G-SYNC. Where possible, the monitor dynamically adjusts its refresh rate so that it matches the frame rate being outputted by the GPU. Both our responsiveness article and our G-SYNC article explore the importance of these two elements being synchronised. At a basic level, a mismatch between the frame rate and refresh rate can cause stuttering (VSync on) or tearing and juddering (VSync off). FreeSync also boasts reduced latency compared to running with VSync enabled, in the variable frame rate environment in which it operates.

FreeSync requires a compatible AMD GPU such as the Radeon RX 580 used in our test system. AMD publishes a list of GPUs which support the technology, with the expectation that future AMD GPUs will support the feature too. The monitor itself must support ‘VESA Adaptive-Sync’ for at least one of its display connectors, as this is the protocol that FreeSync uses. The Gigabyte G32QC supports FreeSync Premium Pro via DP 1.2a+ and HDMI 2.0 on compatible GPUs and systems. Note that HDR can be activated (at the same time as FreeSync) as well. You need to make sure ‘AMD FreeSync Premium Pro’ is set to ‘ON’ in the ‘Gaming’ section of the OSD. On the GPU driver side, recent AMD drivers make activation of the technology very simple and something that usually occurs automatically.
You should ensure the GPU driver is set up correctly to use FreeSync, so open ‘AMD Radeon Software’, click ‘Settings’ (cog icon towards top right) and click on ‘Display’. You should then ensure that the first slider, ‘Radeon FreeSync’, is set to ‘Enabled’ as shown below.

To configure VSync, open ‘AMD Radeon Software’. Click ‘Settings’ (cog icon towards top right) and click ‘Graphics’. The setting is listed as ‘Wait for Vertical Refresh’. This configures it globally, but if you wish to configure it for individual games click ‘Game Graphics’ towards the top right. The default is ‘Off, unless application specifies’ which means that VSync will only be active if you enable it within the game itself, if there is such an option. Such an option does usually exist – it may be called ‘sync every frame’ or something along those lines rather than simply ‘VSync’. Most users will probably wish to enable VSync when using FreeSync to ensure that they don’t get any tearing. You’d therefore select either the third or fourth option in the list, shown in the image below.

Above this dropdown list there’s a toggle for ‘Radeon Enhanced Sync’. This is an alternative to VSync which allows the frame rate to rise above the refresh rate (no VSync latency penalty) whilst potentially keeping the experience free from tearing or juddering. This requires that the frame rate comfortably exceeds the refresh rate, not just peaks slightly above it. We won’t be going into this in detail as it’s a GPU feature rather than a monitor feature.

As usual we tested various game titles using AMD FreeSync and found the experience similar across the range. Any issue affecting one title but not another suggests a game or GPU driver issue rather than a monitor issue. We’ll therefore keep things simple by focusing on a single title for this section: Battlefield V. The excellent flexibility with the in-game graphics options allows the full gamut of refresh rates supported by the monitor to be assessed.
Our Radeon RX 580 isn’t particularly powerful so it wasn’t easy maintaining a solid 165fps on this title. Even with fairly low graphics settings it was common to see dips to around 100fps. Without a VRR technology like FreeSync in place, such dips would cause obvious tearing (VSync off) or stuttering (VSync on). Obvious to us and others sensitive to tearing and stuttering, at least. The reduction in frame rate has the usual effect of reducing ‘connected feel’ and increasing perceived blur due to eye movement, too, which isn’t something FreeSync can assist with. Setting the graphics settings higher or where action became more intense resulted in more significant dips, well into the double digits. ‘Connected feel’ dropped off further and perceived blur due to eye movement was further increased. But FreeSync fulfilled its function to get rid of tearing and stuttering from frame and refresh rate mismatches.

The overshoot we explored earlier at 165Hz was now somewhat stronger. But we still wouldn’t describe it as extreme, nor was it particularly widespread. As is common for a monitor without a G-SYNC module, there’s no variable overdrive. The pixel overdrive is therefore tuned for the maximum refresh rate and isn’t re-tuned as refresh rate decreases. Things would ideally be slackened off a bit to prevent some of this stronger overshoot – but we still found the ‘Balance’ setting the best tuned regardless of refresh rate.

With frame rate dips below 48fps, LFC (Low Framerate Compensation) was used to keep tearing and stuttering at bay. The monitor stuck to a multiple of the frame rate with its refresh rate. We observed noticeable stuttering and sometimes flickering when passing the LFC boundary – the stuttering is very commonly observed and a degree of flickering is often observed on VA models. Some flickering was also observed from time to time within the main VRR window, where there were significant fluctuations in frame rate.
VA panels are particularly sensitive to the voltage changes that accompany such sudden and dramatic refresh rate changes, so this sort of flickering is common. We didn’t find it too strong or obvious in this case, but as with many things it’s subjective.

As noted earlier, AMD FreeSync makes use of Adaptive-Sync technology on a compatible monitor. As of driver version 417.71, users with Nvidia GPUs (GTX 10 series and newer) and Windows 10 can also make use of this Variable Refresh Rate (VRR) technology. When a monitor is used in this way, it is something which Nvidia refers to as ‘G-SYNC Compatible’. Some models are specifically validated as G-SYNC compatible, which means they have been specifically tested by Nvidia and pass specific quality checks.

With the G32QC, you need to connect the monitor up via DisplayPort and enable ‘AMD FreeSync Premium Pro’ in the ‘Gaming’ section of the OSD. This enables Adaptive-Sync on the monitor and will unlock the appropriate settings in Nvidia Control Panel. When you open up Nvidia Control Panel, you should then see ‘Set up G-SYNC’ listed in the ‘Display’ section. Ensure the ‘Enable G-SYNC, G-SYNC Compatible’ and ‘Enable settings for the selected display model’ checkboxes are both checked as shown below. Press OK, then turn the monitor off then on again so that it re-establishes connection – the technology should now be active.

Our suggestions regarding use of VSync also apply, but you’re using Nvidia Control Panel rather than AMD Radeon Software to control this. The setting is found in ‘Manage 3D settings’ under ‘Vertical sync’, where the final option (‘Fast’) is equivalent to AMD’s ‘Enhanced Sync’ setting. You’ll also notice ‘G-SYNC Compatible’ listed under ‘Monitor Technology’ in this section, as shown below. Make sure this is selected (it should be if you’ve set everything up correctly in ‘Set up G-SYNC’).

We’ve already introduced the Aim Stabilizer feature, its principles of operation and how it performs using specific tests.
When using Aim Stabilizer or any strobe backlight feature, it’s vital that your frame rate matches the refresh rate of the display exactly. Otherwise you’re left with very clear stuttering or juddering. This is because there’s very little perceived blur due to eye movement to mask it. As with most strobe backlight technologies, you can’t use Adaptive-Sync at the same time as Aim Stabilizer.

As explored using the UFO Motion Test for ghosting earlier, activating this feature significantly reduced perceived blur due to eye movement. We used the feature on a range of games but will simply focus on Battlefield V running at a constant 165fps at 165Hz with the feature active. Our observations were broadly similar when using the feature at 144Hz and 120Hz with appropriately matching frame rates. The setting did provide a reduction in perceived blur due to eye movement, keeping the environment more sharply focused during rapid turns. This made tracking and engaging enemies potentially a bit easier and could certainly give a competitive edge.

However, the setting was far from the ‘cleanest’ strobe backlight setting we’ve seen. There was significant strobe crosstalk which was about as bold as the object itself in some cases. Some ‘smeary’ trailing remained which, due to fragmentation, looked different from the trailing seen with Aim Stabilizer disabled. These weaknesses certainly hampered the overall motion clarity. On top of this we observed moderate overshoot where some brighter shades were involved in the transition, in the form of bright ‘halo’ trailing. We observed some flashes of colour such as cyan and magenta in places when observing lighter coloured objects, too, particularly along slender edges. This is commonly observed on wide gamut models with strobe backlight settings and not unique to this model, but it was quite obvious in this case.
On top of this, you have the flickering you naturally observe with a strobe backlight setting like this active and the inability to use Adaptive-Sync at the same time, so we don’t really feel this is an appealing feature as implemented here. Some users may still see some utility in it, but if competitive gameplay is important we don’t consider this model the best choice with or without this setting.

On an ideal monitor, HDR (High Dynamic Range) involves the simultaneous display of very bright light shades and very deep dark shades. The monitor should also be able to display a very broad range of shades between these extremes, including highly saturated and vivid shades alongside more muted ones. The monitor would ideally support per-pixel illumination (e.g. backlightless technology such as OLED) or failing that offer a very large number of dimming zones with precise control. A solution such as FALD (Full Array Local Dimming) with a good number of dimming zones, for example. This would allow some areas of the screen to show high brightness whilst others remain very dim. Colour reproduction is also an important part of HDR, with the ultimate goal being support for a huge colour gamut, Rec. 2020. A more achievable near-term goal is support for at least 90% DCI-P3 (Digital Cinema Initiatives standard colour space) coverage. Finally, HDR makes use of at least 10-bit precision per colour channel, so it’s desirable that the monitor supports at least 10-bits per subpixel.

The HDR10 pipeline is the most widely supported HDR standard used in HDR games and movies, and HDR10 is what’s supported here. For most games and other full screen applications that support HDR, the Gigabyte automatically switches into its HDR operating mode. As of the latest Windows 10 update, relevant HDR settings in Windows are found in ‘Windows HD Color settings’ which can be accessed via ‘Display settings’ (right click the desktop).
Most game titles will activate HDR correctly when the appropriate in-game setting is selected. A minority of game titles that support HDR will only run in HDR if the setting is active in Windows as well. Specifically, the toggle which says ‘Play HDR games and apps’. If you want to view HDR movies on a compatible web browser, for example, you’d also need to activate the ‘Stream HDR Video’ setting. These settings are shown below.

Also note that there’s a slider that allows you to adjust the overall balance of SDR content if HDR is active in Windows. This is really just a digital brightness slider, so you lose contrast by adjusting it. When viewing SDR content with HDR active in Windows things appear overly bright and somewhat washed out – quite flooded, as if gamma is too low. As we explore shortly, you lose access to many adjustments in the OSD, including gamma and colour channels. We’d recommend only activating HDR in Windows if you’re about to specifically use an HDR application that requires it, and have it deactivated when viewing normal SDR content on the monitor.

For simplicity we’ll just focus our attention on two titles: Battlefield V and Shadow of the Tomb Raider. We’ve tested both titles on a broad range of monitors under HDR and we know they represent a good test of monitor HDR capability. The experience described here is largely dictated and limited by the screen itself. Although our testing here is focused on HDR PC gaming using DisplayPort, we made similar observations when viewing HDR video content on the Netflix app. There are some additional points to bear in mind if you wish to view such content. We also made observations using HDMI, which would be used when viewing HDR content on an HDR compatible games console for example, and things were very similar.

As usual for HDR, the settings available in the monitor are greatly restricted, with the gamma control, sharpness and colour channels greyed out.
Unusually for HDR, brightness can be adjusted and no sharpness filter is applied by default. Some presets such as ‘Reader’ and ‘Movie’ apply a mild sharpness filter and ‘FPS’ a strong sharpness filter under HDR. This is the only aspect of the image they will change under HDR, however. We found the experience decidedly ‘non HDR-like’ with reduced brightness so preferred to just leave this at the default of ‘100’.

The Gigabyte G32QC is VESA DisplayHDR 400 certified. This is the lowest level of certification offered by VESA, so only a basic HDR experience is provided. The colour gamut requirements are not strict at all here, but on the Gigabyte we recorded 90% DCI-P3 which is actually a box ticked even for higher tiers. This is shown in the graphic below, which featured earlier in the review. The red triangle shows the monitor’s colour gamut, the blue triangle DCI-P3 and green triangle sRGB.

With the colour gamut providing a decent match to the DCI-P3 near-term target game developers have in mind, the gamut was put to quite good use. The oversaturated elements such as some overly reddish browns and yellowish greens with too much of a yellow push were not observed under HDR because of this. But things actually tended to look a bit undersaturated, almost as if gamma was too low. This was exaggerated peripherally where perceived gamma is reduced and therefore perceived saturation losses occur. Certain skin tones looked too reddish or overly tanned under SDR, but now they had the opposite problem and often looked a bit anaemic. There were some reasonable licks of vibrancy where the developers wanted there to be, but this lacked the vivacity we’ve seen before under HDR – even on models with a similar colour gamut. Considering an array of what should be rich oranges and reds observed for various painted objects and roaring flames on both titles, for example, they just weren’t as eye-catching as they should be.
There were some shades there that extended beyond the boundaries of sRGB, but the overall vibrancy was distinctly lacking compared to SDR even for these objects.

This scene highlighted the nuanced shade variety for brighter shades nicely. The mist above the water and light streaming in from above showcased some very fine and natural gradients that were greatly enhanced by the improved nuanced shade variety. The bright elements such as the glint of sun on the water and light streaming in from up above looked reasonably bright, but didn’t have the sort of ‘pop’ we’ve seen from some models under HDR. There are two reasons for this. Firstly, the luminance isn’t all that high by HDR standards. Secondly, VESA DisplayHDR 400 doesn’t mandate local dimming and none was used here. The surrounding darker shades showed a significant lack of depth and the contrast between those and the brighter shades just wasn’t as strong as it could be.

Unlike some models even at this HDR level and without local dimming included, no Dynamic Contrast was employed. So even if predominantly dark content was displayed, the backlight was pumping out just as much light as if the brightest content were displayed – not good for depth and atmosphere. You can of course manually reduce brightness, but you’d then have brighter shades with a distinctly non-HDR appearance and would have to keep adjusting brightness manually to gain any semblance of HDR from the experience. Of course effective local dimming is by far the best thing to have for a convincing HDR experience, but even when it’s absent effective Dynamic Contrast aided by HDR metadata can help. The ViewSonic XG270QC, for example, put this to very good use – it also had a significantly improved peak luminance compared to this model and avoided the undersaturated look to many shades that we observed here. It’s VESA DisplayHDR certified just like this model, but the HDR experience was markedly better in comparison.
The HDR experience using Shadow of the Tomb Raider as an example is explored in the section of video review below.

We’ve now tested a wide range of curved screens with varying curvatures and screen sizes. With its 31.5” screen and 1500R curve, there’s a moderate effect from the curvature when viewing the G32QC at a desk. But it’s something we found very easy to adapt to, soon forgetting it’s even there at all. It creates a slight feeling of extra depth when using the monitor and draws you in a little bit, but in no way felt unnatural to us and didn’t give a distorted look to things. The curve also has the potential to slightly enhance viewing comfort. Some users have legitimate reasons for chasing geometric perfection or may spend a lot of time viewing the monitor from a decentralised angle, in which case a flat model could make more sense. But most fear it unnecessarily, as the effect appears greatly exaggerated based on pictures and videos of curved monitors.

With a 2560 x 1440 (WQHD) resolution spread across a 31.5” screen, the pixel density of the monitor (93.24 PPI or Pixels Per Inch) isn’t all that high compared to smaller WQHD models or most ‘4K’ UHD monitors. It’s similar to a ~24” Full HD monitor, which many users are quite comfortable with. You don’t have the same clarity or detail potential as with tighter pixel densities, but it’s still pretty decent in that respect. The images below give an idea of the desktop real-estate and multi-tasking, but in no way represent the actual experience of viewing the monitor in person.

It may be desirable or necessary to run the monitor below its native 2560 x 1440 (WQHD). Perhaps for performance reasons, or because a system such as a games console is being used that doesn’t support the WQHD resolution. The monitor provides scaling functionality via both DP and HDMI. It can be run at resolutions such as 1920 x 1080 (Full HD) and can use an interpolation (scaling) process to map the image onto all pixels of the screen.
The refresh rates supported were covered at the end of the ‘Features and aesthetics’ section. As noted there a ‘4K’ UHD downsampling mode is also offered when using HDMI, at up to 60Hz. To ensure the monitor rather than GPU is handling the scaling process, as a PC user, you need to ensure the GPU driver is correctly configured so that the GPU doesn’t take over the scaling process. For AMD GPU users, the driver is set up correctly by default to allow the monitor to interpolate where possible. Nvidia users should open Nvidia Control Panel and navigate to ‘Display – Adjust desktop size and position’. Ensure that ‘No Scaling’ is selected and ‘Perform scaling on:’ is set to ‘Display’ as shown in the following image.

The monitor offers various ‘Display Mode’ settings in the ‘Gaming’ section of the OSD, as explored in the OSD video. A setting of particular interest is the ‘1:1’ pixel mapping feature, only accessible if ‘AMD FreeSync Premium Pro’ is disabled in the OSD. The monitor will only use the pixels called for in the source resolution to display the image, with a black border around the image using the remaining pixels. The other key setting is the default ‘Full’ setting, which uses interpolation to map the source resolution onto the 2560 x 1440 pixels of the display.

When running at 1920 x 1080 (Full HD), there was a moderate degree of softening compared to viewing a native Full HD screen. You could offset this by using the ‘Sharpness’ or ‘Super Resolution’ features in the OSD, which both offer a slightly different sharpness filter. We found setting ‘Super Resolution’ to ‘1’ worked quite well. It didn’t quite look like a native Full HD screen of the size, but it provided a relatively crisp and detailed look. It was certainly effective in offsetting the moderate softening observed initially.
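
Two of the numbers in this part of the review can be reproduced with simple arithmetic: the 93.24 PPI pixel density and the non-integer scale factor involved when a Full HD signal is mapped onto the WQHD panel. The sketch below is illustrative only. The function names are hypothetical, and it assumes the ‘1:1’ mode centres the image on the panel, which the review does not state explicitly.

```python
import math

NATIVE = (2560, 1440)   # native WQHD resolution quoted in the review
DIAGONAL_IN = 31.5      # panel diagonal in inches, from the specifications


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


def scale_factor(source, native=NATIVE):
    """Horizontal and vertical stretch applied by the 'Full' display mode."""
    return native[0] / source[0], native[1] / source[1]


def one_to_one_borders(source, native=NATIVE):
    """Black border per side (horizontal, vertical) in pixels.

    Assumption: the '1:1' mode centres the source image on the panel.
    """
    return (native[0] - source[0]) // 2, (native[1] - source[1]) // 2


full_hd = (1920, 1080)
print(round(pixels_per_inch(*NATIVE, DIAGONAL_IN), 2))  # pixel density of the G32QC
print(scale_factor(full_hd))                            # stretch for a Full HD signal
print(one_to_one_borders(full_hd))                      # border widths in '1:1' mode
```

A Full HD signal has to be stretched by a factor of 4/3 in each direction, so source pixels cannot map cleanly onto whole panel pixels. That is one plausible reason some softening is expected from interpolation at this resolution.
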
With this flexibility and the overall performance here, we consider this to be a relatively strong and suitably adjustable interpolation process and better than many we’ve come across on WQHD models. As usual, if you’re running the monitor at 2560 x 1440 and viewing 1920 x 1080 content (for example a video over the internet or a Blu-ray, using movie software) then it is the GPU and software that handles the upscaling. That’s got nothing to do with the monitor itself – there is a little bit of softening to the image compared to viewing such content on a native Full HD monitor, but it’s not extreme and shouldn’t bother most users.

The video below shows the monitor in action. The camera, processing done and your own screen all affect the output – so it doesn’t accurately represent what you’d see when viewing the monitor in person. It still provides useful visual demonstrations and explanations which help reinforce some of the key points raised in the written piece.

Timestamps: Features & Aesthetics; Contrast; Colour reproduction; HDR (High Dynamic Range); Responsiveness (General); Responsiveness (Adaptive-Sync)

For those wanting an immersive experience with a reasonable but not extreme pixel density and not overly taxing resolution, ~32” 2560 x 1440 (WQHD) models can be very attractive. The G32QC delivers this, coupling it with a 1500R curve to help draw you in a little bit more. We found the experience immersive but in no way unnatural or overbearing. The amount of ‘desktop real estate’ the resolution provides was also easy to appreciate. The pixel density wasn’t as high as a 27” WQHD model and didn’t give quite the same detail and clarity during normal use, and it fell well short of a ~32” ‘4K’ UHD model in that respect. But with a similar pixel density to a ~24” Full HD model, you’re at a decent level that many are quite comfortable with. The monitor offered much more basic styling than those in the AORUS sub-brand.
The monitor was slightly limited ergonomically, to just a bit of height and tilt adjustment – but this was still an improvement over some tilt-only solutions. The overall construction of the monitor was quite basic, in particular the stand base which certainly didn’t have a premium ‘feel’ to it.

As usual for the panel type, contrast was the main strength. We measured static contrast that slightly exceeded the already decent specified values. This gave darker scenes improved depth and atmosphere compared to TN or IPS panels and indeed some VA models in this respect. There was a moderate amount of ‘VA glow’, lightening things up a bit towards the edges – particularly near the bottom from our preferred viewing position. This was brought out more on our unit by moderately strong clouding in that region as well. But this level of clouding isn’t something all units will suffer from. There was a bit of ‘black crush’ and some perceived gamma shifts that affected dark detail levels, but not extreme for a VA model of the size and nothing we felt took too much away from the clear strengths. The screen surface was light and had a relatively smooth surface texture for a matte finish, keeping things free from obvious graininess and preventing an obvious layered appearance in front of the image.

The monitor produced a fairly vibrant and varied image overall, with reasonable extension beyond the sRGB colour space (90% DCI-P3 recorded). Some saturation was lost peripherally, particularly lower down the screen from our preferred viewing position, but this was by no means extreme for the panel type and size. Indeed, it was far less pronounced than the vertical shifts on even a significantly smaller TN model.

The monitor offers a degree of HDR support, with VESA DisplayHDR 400 certification. The overall experience was quite basic. The colour gamut was put to good use under HDR and 10-bit processing could be used in the pipeline to enhance the nuanced shade variety.
But the lack of any local dimming or even Dynamic Contrast under HDR detracted from the overall experience. Coupled with a relatively limited upper end luminance for HDR, things didn’t really have a particularly ‘HDR-like’ appearance. We also found some shades more subdued than we’d expect given the gamut and panel technology used, under HDR.

Responsiveness was good in some respects. The monitor offered low input lag and a 165Hz refresh rate, giving a nice ‘connected feel’ and reducing perceived blur due to eye movement. There were some significant weaknesses when it came to pixel responsiveness. Not the most extreme we’ve seen from the panel type by any means, but a touch weaker than those 27” WQHD models we tested a little earlier than this one. Models which are hardly speed demons when it comes to pixel responsiveness. There was some noticeable ‘smeary’ trailing where dark shades were involved in the transition and some fairly widespread ‘powdery’ trailing for other transitions. There was a bit of overshoot as well, but nothing we found disturbing using the optimal ‘Overdrive’ setting.

The monitor offered Adaptive-Sync which provided support for AMD FreeSync and Nvidia’s ‘G-SYNC Compatible Mode’. Aside from a few hiccups with our dated Nvidia GPU (not unique to this monitor), the technology worked nicely and helped reduce or remove stuttering from frame and refresh rate mismatches. If you’re that way inclined the monitor offers a strobe backlight setting instead (Aim Stabilizer), but this was one of the weaker strobe backlight settings we’ve come across.

Overall, we feel this is a well-priced monitor that gives you a lot of screen for your money. If you’re primarily interested in a strong contrast performance and want a large screen for an immersive experience, this model could well deliver what you’re after.
It gives you pretty vibrant colour output on top of that – although the sRGB emulation setting for those who want to tone things down a bit was not too well-tuned. At least not on our unit. If you’re sensitive to weaknesses in pixel responsiveness, are after the strongest colour consistency or want a model with strong HDR performance then this isn’t really the model for you. Then again, you’ll struggle to find anything substantially better than the G32QC in those areas without compromising elsewhere.

The bottom line: a large monitor that provides strong contrast, a high refresh rate and an immersive experience – but not ideal for lovers of strong pixel responsiveness or strong HDR.

FreeSync – the technology and activating it

The Gigabyte supports a variable refresh rate range of 48 – 165Hz. That means that if the game is running between 48fps and 165fps, the monitor will adjust its refresh rate to match. When the frame rate rises above 165fps, the monitor will stay at 165Hz and the GPU will respect your selection of ‘VSync on’ or ‘VSync off’ in the graphics driver. With ‘VSync on’ the frame rate will not be allowed to rise above 165fps, at which point VSync activates and imposes the usual associated latency penalty. With ‘VSync off’ the frame rate is free to climb as high as the GPU will output (potentially >165fps). AMD LFC (Low Framerate Compensation) is also supported by this model, which means that the refresh rate will stick to multiples of the frame rate where it falls below the 48Hz (48fps) floor of operation for FreeSync. If a game ran at 37fps, for example, the refresh rate would be 74Hz to help keep tearing and stuttering at bay. LFC sometimes seemed to kick in a bit closer to 52Hz (52fps), but this makes little difference in practice. This feature is used regardless of VSync setting, so it’s only above the ceiling of operation where the VSync setting makes a difference.
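
The behaviour described above can be summarised as a small model. This is a minimal sketch rather than the actual driver logic: the function name is hypothetical, and it assumes LFC picks the smallest whole multiple of the frame rate that lands back inside the 48 – 165Hz window. The review also notes that LFC sometimes seemed to engage nearer 52fps, which this simple model ignores.

```python
import math

VRR_FLOOR_HZ = 48.0     # floor of the FreeSync range quoted in the review
VRR_CEILING_HZ = 165.0  # ceiling of the FreeSync range quoted in the review


def panel_refresh_hz(fps, floor=VRR_FLOOR_HZ, ceiling=VRR_CEILING_HZ):
    """Return (refresh rate in Hz, regime) for a given frame rate.

    Assumption: below the floor, LFC uses the smallest integer multiple of
    the frame rate that reaches the floor. Real drivers may choose differently.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > ceiling:
        # Monitor stays at its maximum; the VSync setting decides what happens.
        return ceiling, "above ceiling (VSync setting applies)"
    if fps >= floor:
        # Refresh rate simply tracks the frame rate.
        return fps, "inside VRR window"
    multiplier = math.ceil(floor / fps)
    return fps * multiplier, f"LFC x{multiplier}"


for fps in (200, 100, 37, 20):
    print(fps, panel_refresh_hz(fps))
```

With the review’s own example of 37fps, the model doubles the frame rate to give 74Hz, matching the figure quoted above.
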


Some users prefer to leave VSync enabled but use a frame rate limiter set a few frames below the maximum supported (e.g. 162fps) instead, avoiding any VSync latency penalty at frame rates near the ceiling of operation or tearing from frame rates rising above the refresh rate. If you go to ‘Game Assist’ and activate the ‘Refresh Rate’ feature, the monitor will display the refresh rate. This will coincide with the frame rate of the content if the monitor is within the main VRR window. The counter reacts very rapidly to changes in frame rate. Finally, it’s worth noting that FreeSync only removes stuttering or juddering related to mismatches between frame rate and refresh rate. It can’t compensate for other interruptions to smooth game play, for example network latency or insufficient system memory. Some game engines will also show stuttering (or ‘hitching’) for various other reasons which won’t be eliminated by the technology.

FreeSync – the experience

Nvidia Adaptive-Sync (‘G-SYNC Compatible’)

You will also see in the image above that it states: “Selected Display is not validated as G-SYNC Compatible.” This means Nvidia hasn’t specifically tested and validated the display. On our GTX 10 Series GPU (GTX 1080 Ti), the experience was similar to what we described with FreeSync in some respects. The floor of operation was 65fps (65Hz) rather than 48fps (48Hz), but frame rate to refresh rate multiplication was used below this. We observed similar stuttering episodes and flickering when this boundary was crossed – and again observed some flickering during significant frame rate fluctuation. There were some additional issues we came across. The technology frequently disabled itself momentarily, producing some fairly frequent stuttering. This wasn’t as frequent or obvious as with the technology disabled and VSync enabled. Newer GPUs such as the RTX 20 and RTX 30 series may fare better. We’ve observed various issues on other monitors using ‘G-SYNC Compatible Mode’ that seem to only apply to older GPUs like ours but not newer generation GPUs. Either way, we still found the technology useful and preferred having it enabled over disabled.

Finally, remember that you can use the ‘Refresh Rate’ feature in the ‘Game Assist’ section of the OSD to display the current refresh rate of the monitor. This will reflect the frame rate if it’s within the main variable refresh rate window, with the additional fluctuations described above possible depending on your GPU. And as with AMD FreeSync, HDR can be used at the same time as ‘G-SYNC Compatible Mode’.

Aim Stabilizer

HDR (High Dynamic Range)

Colour gamut 'Test Settings'

The HDR10 pipeline makes use of 10 bits per colour channel, which the monitor supports via 8-bit + FRC. At some refresh rates (144Hz+ for DP, 120Hz+ for HDMI) the dithering stage is offloaded to the GPU at the native resolution, for bandwidth reasons. We’ve carefully observed a range of content (including fine gradients) on a broad range of monitors where 10-bit is supported monitor side (usually 8-bit + FRC) and where the GPU handles the dithering under HDR. This includes comparisons on a given model where the monitor handles the dithering at some refresh rates and the GPU handles it at others, due to bandwidth limitations. Regardless of the method used to achieve the ‘10-bit’ colour signal, we find the result very similar. Some subtle differences may be noticed during careful side by side comparison of very specific content, but things are handled very well even if GPU dithering is used. The enhanced precision of the 10-bit signal aids the nuanced shade variety, most noticeable at the high end (bright shades) in this case. For dark scenes the superior array of closely matching dark shades added some variety, but as we explore shortly there was a certain lack of depth under HDR that meant the benefits of this were lost to a fair extent. The image below is one of our favourites from Shadow of the Tomb Raider for highlighting a strong HDR performance. The photograph below was taken on a different monitor; it’s just to illustrate the scene being described here.

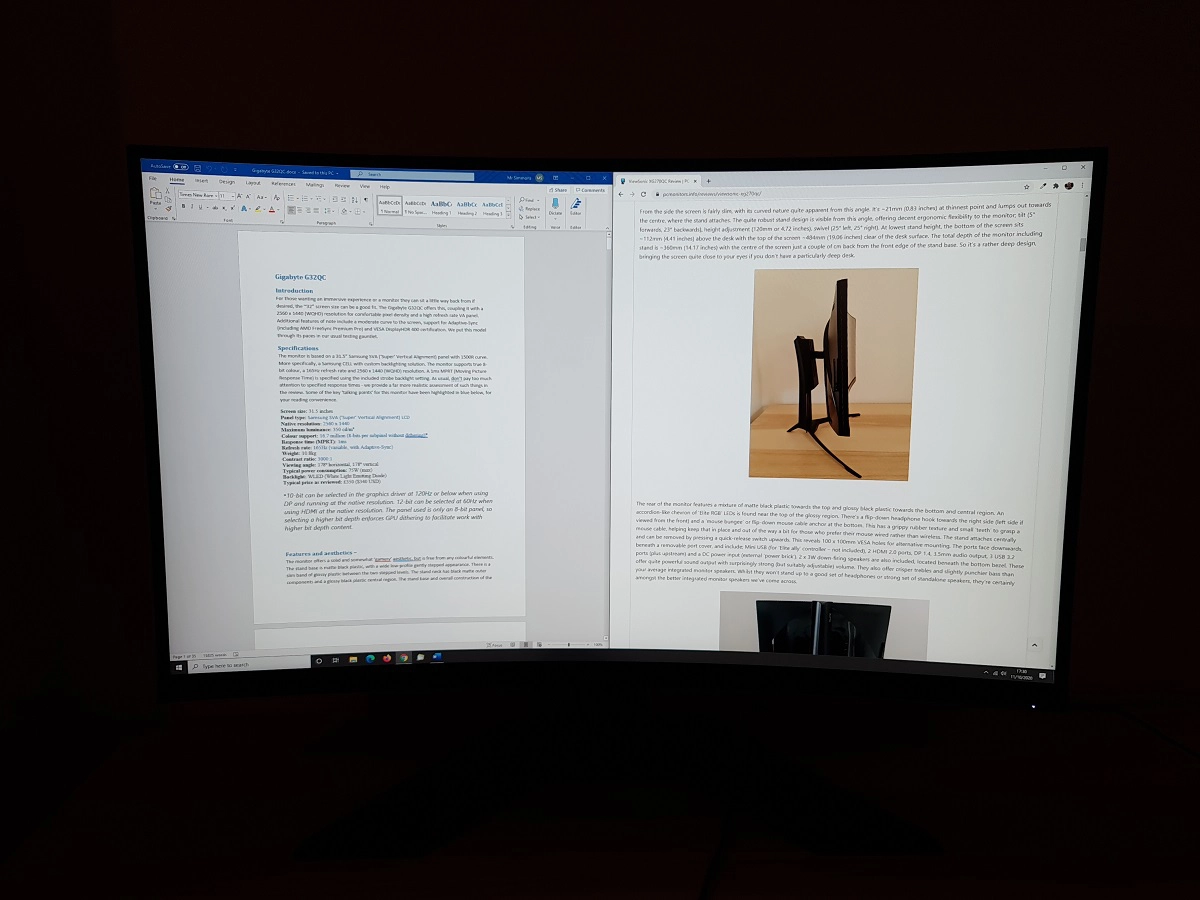
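As a brief aside on the ‘8-bit + FRC’ processing mentioned earlier in this section, the Python sketch below shows the basic idea of temporal dithering: a 10-bit level that falls between two 8-bit levels is approximated by alternating those two levels over a short run of frames. The function name and the simple four-frame cycle are assumptions for illustration only. Real monitor and GPU dithering uses more elaborate spatial and temporal patterns and this is not a description of what this model actually does.

```python
from typing import List


def frc_frames(value_10bit: int) -> List[int]:
    """Return four 8-bit levels whose average approximates a 10-bit level.

    Hypothetical illustration of temporal dithering (FRC), assuming a
    fixed four-frame cycle.
    """
    if not 0 <= value_10bit <= 1023:
        raise ValueError("value_10bit must be in the range 0-1023")
    # Each 8-bit step spans four 10-bit steps.
    base, remainder = divmod(value_10bit, 4)
    # The brighter neighbouring level, clamped to the 8-bit maximum.
    upper = min(base + 1, 255)
    # Show the brighter level for 'remainder' frames out of four.
    return [upper if i < remainder else base for i in range(4)]
```

For the 10-bit level 514, which sits halfway between the 8-bit levels 128 and 129, the sketch shows 129 for two frames and 128 for two frames. Averaged over the cycle the eye perceives a shade of about 128.5.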

The curve and resolution

The curve makes the gaming experience a bit more engrossing, but again not unnatural. It’s something you soon forget is even there at all; it just seems to ‘work’. The effect is more noticeable on relatively large screens like this, although not as pronounced as on even wider screens such as UltraWides or indeed models with steeper curvature. As noted earlier the pixel density is similar to a ~24” Full HD model, so a level that many are quite comfortable with but not offering the same clarity and detail as significantly tighter pixel densities. The images below show various games running on the monitor, but in no way accurately represent how the monitor appears in person.
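The pixel density comparison above can be checked with some simple arithmetic. The Python sketch below divides the diagonal resolution in pixels by the diagonal screen size in inches. The function name is our own, and the comparison screen is assumed to be exactly 24” with a 1920 x 1080 resolution.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density (PPI) from the resolution and the diagonal size in inches."""
    if diagonal_in <= 0:
        raise ValueError("diagonal_in must be positive")
    # Diagonal length in pixels divided by diagonal length in inches.
    return math.hypot(width_px, height_px) / diagonal_in


g32qc = pixels_per_inch(2560, 1440, 31.5)    # this monitor
full_hd_24 = pixels_per_inch(1920, 1080, 24.0)  # assumed 24-inch Full HD screen
```

This works out at roughly 93 PPI for the G32QC and roughly 92 PPI for a 24” Full HD screen, so the two are indeed very close.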

Interpolation and upscaling

Video review

Conclusion

| Positives | Negatives |
| --- | --- |
| Decent ‘out of the box’ performance with excellent OSD flexibility, fairly vibrant colour output due to some extension beyond sRGB – without heavy oversaturation | sRGB emulation mode poorly tuned for gamma (not adjustable in OSD), although brightness is adjustable. Colour gamut not as generous as some models |
| Strong static contrast and a light and relatively smooth screen surface, providing a decent look to both darker and lighter content | ‘VA glow’ affects atmosphere in dimmer conditions, particularly further down the screen. A little ‘black crush’ and quite a limited HDR experience |
| A 165Hz refresh rate and low input lag, with Adaptive-Sync support to help remove tearing and stuttering | Fairly clear and widespread pixel response time weaknesses. Moderate overshoot for some transitions, but not extreme or widespread. Some issues with Adaptive-Sync on our GTX 10 series GPU, but still useful to have |
| Height adjustment from the stand and a large screen format with decent resolution, providing the sort of immersive experience and real-estate some are after | Basic overall build quality, particularly non-premium stand base ‘feel’ and pixel density not particularly high |