Install Apache Hadoop / HBase On Ubuntu 20.04

This tutorial explains how to install Hadoop and HBase on an Ubuntu 20.04 (Focal Fossa) Linux server. HBase is an open-source, distributed, non-relational database written in Java that runs on top of the Hadoop Distributed File System (HDFS). HBase lets you run large clusters hosting very large tables, with billions of rows and millions of columns, on commodity hardware.

This installation guide is not geared toward a highly available production setup; it is suited to a lab setup for development work. HBase will be installed on a single-node Hadoop cluster. The server is an Ubuntu 20.04 virtual machine with the following specs:

- 16GB RAM

- 8 vCPUs

- 20GB boot disk

- 100GB raw disk for data storage

If your resources don’t match this lab setup, you can work with what you have and see whether the services are able to start.

For CentOS 7, refer to How to Install Apache Hadoop / HBase on CentOS 7

Install Hadoop on Ubuntu 20.04

The first section covers the installation of a single-node Hadoop cluster on Ubuntu 20.04 LTS Server. Installing Ubuntu 20.04 Server itself is outside the scope of this guide; consult the documentation for your virtualization environment for how to do that.

Step 1: Update System

Update and optionally upgrade all the packages installed on your Ubuntu system:

    sudo apt update
    sudo apt -y upgrade
    sudo reboot

Step 2: Install Java on Ubuntu 20.04

Install Java if it is missing on your Ubuntu 20.04 system.

    sudo apt update
    sudo apt install default-jdk default-jre

After successfully installing Java on Ubuntu 20.04, confirm the version with the java command line.

    $ java -version
    openjdk version "11.0.7" 2020-04-14
    OpenJDK Runtime Environment (build 11.0.7+10-post-Ubuntu-3ubuntu1)
    OpenJDK 64-Bit Server VM (build 11.0.7+10-post-Ubuntu-3ubuntu1, mixed mode, sharing)

Set the JAVA_HOME variable.

    cat <<EOF | sudo tee /etc/profile.d/hadoop_java.sh
    export JAVA_HOME=\$(dirname \$(dirname \$(readlink \$(readlink \$(which javac)))))
    export PATH=\$PATH:\$JAVA_HOME/bin
    EOF

Load the new settings into your current shell.

    source /etc/profile.d/hadoop_java.sh

Then test:

    $ echo $JAVA_HOME
    /usr/lib/jvm/java-11-openjdk-amd64
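The nested readlink calls in the profile script resolve exactly two symlink hops (on Ubuntu, /usr/bin/javac to /etc/alternatives/javac to the real binary). A minimal sketch of the same lookup that follows any number of hops with readlink -f is shown here; the function name java_home_from is hypothetical, and GNU readlink is assumed.

```shell
# java_home_from [PATH_TO_JAVAC]
# Hypothetical helper: print the JDK root for a javac binary.
# Defaults to the javac found on PATH.
java_home_from() {
    local javac_path="${1:-$(command -v javac)}"
    [ -n "$javac_path" ] || { echo "javac not found" >&2; return 1; }
    local real
    # readlink -f follows the whole symlink chain in one call.
    real="$(readlink -f "$javac_path")" || return 1
    # Strip the trailing /bin/javac to get the JDK root.
    dirname "$(dirname "$real")"
}
```

Running java_home_from with no argument on the lab server should print the same directory that echo $JAVA_HOME shows above.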

Ref:

How to set default Java version on Ubuntu / Debian

Step 3: Create a User Account for Hadoop

Let’s create a separate user for Hadoop so that we have isolation between the Hadoop file system and the Unix file system.

    sudo adduser hadoop
    sudo usermod -aG sudo hadoop

Once the user is added, generate an SSH key pair for the user.

    $ sudo su - hadoop
    $ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
    Created directory '/home/hadoop/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/hadoop/.ssh/id_rsa.
    Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:mA1b0nzdKcwv/LPktvlA5R9LyNe9UWt+z1z0AjzySt4 hadoop@hbase
    The key's randomart image is:
    [randomart image omitted]

Add this user’s key to the list of authorized SSH keys.

    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    chmod 0600 ~/.ssh/authorized_keys

Verify that you can ssh using the added key.

    $ ssh localhost
    The authenticity of host 'localhost (127.0.0.1)' can't be established.
    ECDSA key fingerprint is SHA256:42Mx+I3isUOWTzFsuA0ikhNN+cJhxUYzttlZ879y+QI.
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.
    Welcome to Ubuntu 20.04 LTS (GNU/Linux 5.4.0-28-generic x86_64)
     * Documentation: https://help.ubuntu.com
     * Management: https://landscape.canonical.com
     * Support: https://ubuntu.com/advantage
    The programs included with the Ubuntu system are free software;
    the exact distribution terms for each program are described in the
    individual files in /usr/share/doc/*/copyright.
    Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by
    applicable law.
    $ exit
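Appending the public key with >> adds a duplicate line every time the step is repeated. The sketch below does the same append only when the key is not already present. The function name add_authorized_key is hypothetical, and the second argument exists only so the helper can be tried against a scratch file.

```shell
# add_authorized_key PUBKEY_FILE [AUTHORIZED_KEYS_FILE]
# Hypothetical helper: append a public key once and keep the file at mode 0600.
add_authorized_key() {
    local pubkey_file="$1"
    local auth_file="${2:-$HOME/.ssh/authorized_keys}"
    local key
    key="$(cat "$pubkey_file")" || return 1
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    # -x: match the whole line, -F: treat the key as a fixed string, -q: quiet
    if ! grep -qxF -- "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi
    chmod 600 "$auth_file"
}
```

As the hadoop user, add_authorized_key ~/.ssh/id_rsa.pub would stand in for the cat and chmod pair above.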

Step 4: Download and Install Hadoop

Check for the most recent version of Hadoop before downloading the version specified here. As of this writing, that is version 3.2.1.

Save the version to a variable.

    RELEASE="3.2.1"

Then download the Hadoop archive to your local system.

    wget https://www-eu.apache.org/dist/hadoop/common/hadoop-$RELEASE/hadoop-$RELEASE.tar.gz
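It is worth checking the archive before extracting it. Apache release directories normally carry a .sha512 file next to each tarball; the sketch below compares a file against a digest you copy from there. The function name verify_sha512 is hypothetical, and the digest is passed in by hand because the layout of the published .sha512 files varies between releases.

```shell
# verify_sha512 FILE EXPECTED_HEX
# Hypothetical helper: succeed only if FILE hashes to EXPECTED_HEX.
verify_sha512() {
    local file="$1" expected="$2" actual
    actual="$(sha512sum "$file" | awk '{print tolower($1)}')" || return 1
    # Published digests are sometimes upper case, so normalise before comparing.
    expected="$(printf '%s' "$expected" | tr 'A-F' 'a-f')"
    [ "$actual" = "$expected" ]
}
```

A typical call would be verify_sha512 hadoop-$RELEASE.tar.gz "<digest>" && echo "checksum OK", with the digest pasted as a single string without spaces.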

Extract the file.

    tar -xzvf hadoop-$RELEASE.tar.gz

Move the resulting directory to /usr/local/hadoop.

    sudo mv hadoop-$RELEASE/ /usr/local/hadoop
    sudo mkdir /usr/local/hadoop/logs
    sudo chown -R hadoop:hadoop /usr/local/hadoop

Set HADOOP_HOME and add the directory with Hadoop binaries to your $PATH.

    cat <<EOF | sudo tee /etc/profile.d/hadoop_java.sh
    export JAVA_HOME=\$(dirname \$(dirname \$(readlink \$(readlink \$(which javac)))))
    export HADOOP_HOME=/usr/local/hadoop
    export HADOOP_HDFS_HOME=\$HADOOP_HOME
    export HADOOP_MAPRED_HOME=\$HADOOP_HOME
    export YARN_HOME=\$HADOOP_HOME
    export HADOOP_COMMON_HOME=\$HADOOP_HOME
    export HADOOP_COMMON_LIB_NATIVE_DIR=\$HADOOP_HOME/lib/native
    export PATH=\$PATH:\$JAVA_HOME/bin:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin
    EOF

Source the file.

    source /etc/profile.d/hadoop_java.sh

Confirm your Hadoop version.

    $ hadoop version
    Hadoop 3.2.1
    Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842
    Compiled by rohithsharmaks on 2019-09-10T15:56Z
    Compiled with protoc 2.5.0
    From source with checksum 776eaf9eee9c0ffc370bcbc1888737
    This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.2.1.jar

Step 5: Configure Hadoop

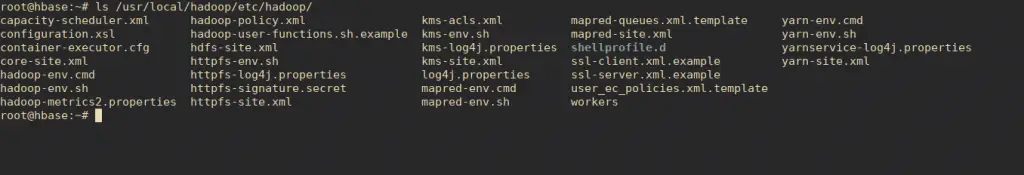

All your Hadoop configuration files are located under the /usr/local/hadoop/etc/hadoop/ directory.

A number of configuration files need to be modified to complete the Hadoop installation on Ubuntu 20.04.

First edit JAVA_HOME in the shell script hadoop-env.sh:

    $ sudo vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh
    # Set JAVA_HOME - Line 54
    export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64/

Then configure:

1. core-site.xml

The core-site.xml file contains Hadoop cluster information used when starting up. These properties include:

- The port number used for Hadoop instance

- The memory allocated for file system

- The memory limit for data storage

- The size of Read / Write buffers.

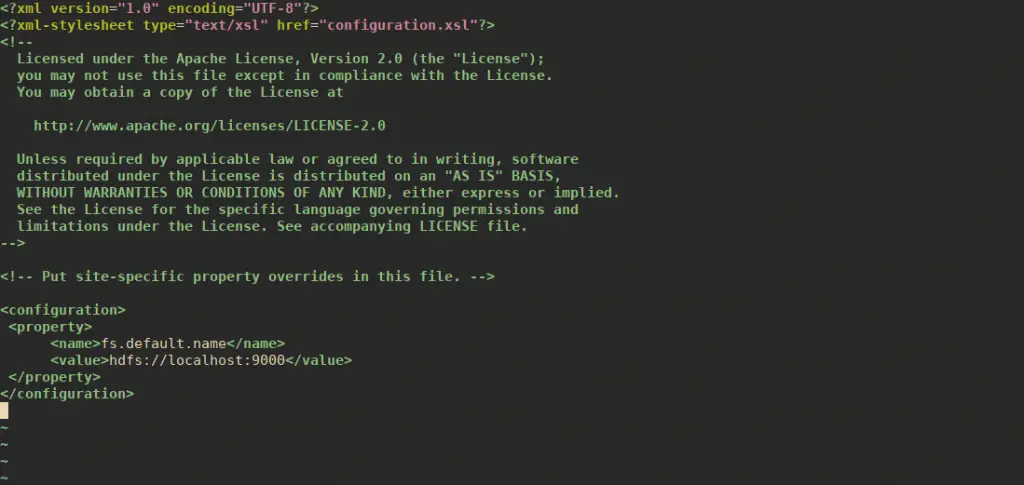

Open core-site.xml:

    sudo vim /usr/local/hadoop/etc/hadoop/core-site.xml

Add the following property between the <configuration> and </configuration> tags. The name fs.default.name is the older, deprecated spelling of fs.defaultFS; Hadoop 3.x still accepts it.

    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost:9000</value>
        <description>The default file system URI</description>
      </property>
    </configuration>

See screenshot below.
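If you prefer not to edit the file by hand, the same core-site.xml can be written from the shell. This is a minimal sketch, not the only way to do it: the function name write_core_site is hypothetical, it overwrites the whole file, and it uses the current property name fs.defaultFS rather than the deprecated fs.default.name.

```shell
# write_core_site CONF_DIR [DEFAULT_FS_URI]
# Hypothetical helper: (over)write CONF_DIR/core-site.xml with one property.
write_core_site() {
    local conf_dir="$1"
    local fs_uri="${2:-hdfs://localhost:9000}"
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>${fs_uri}</value>
    <description>The default file system URI</description>
  </property>
</configuration>
EOF
}
```

On the lab server the call would be write_core_site /usr/local/hadoop/etc/hadoop, run as the hadoop user, who owns that directory after the chown in Step 4.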

2. hdfs-site.xml

This file needs to be configured for each host to be used in the cluster. This file holds information such as:

- The namenode and datanode paths on the local filesystem.

- The value of replication data

In this setup, I want to store the Hadoop data on a secondary disk, /dev/sdb.

    $ lsblk
    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda      8:0    0 76.3G  0 disk
    └─sda1   8:1    0 76.3G  0 part /
    sdb      8:16   0  100G  0 disk
    sr0     11:0    1 1024M  0 rom

I’ll partition this disk and mount it on the /hadoop directory.

    sudo parted -s -- /dev/sdb mklabel gpt
    sudo parted -s -a optimal -- /dev/sdb mkpart primary 0% 100%
    sudo parted -s -- /dev/sdb align-check optimal 1
    sudo mkfs.xfs /dev/sdb1
    sudo mkdir /hadoop
    echo "/dev/sdb1 /hadoop xfs defaults 0 0" | sudo tee -a /etc/fstab
    sudo mount -a

Confirm:

    $ df -hT | grep /dev/sdb1
    /dev/sdb1      xfs   100G   84M  100G   1% /hadoop

Create directories for the namenode and datanode.

    sudo mkdir -p /hadoop/hdfs/{namenode,datanode}

Set ownership to the hadoop user and group.

    sudo chown -R hadoop:hadoop /hadoop

Now open the file:

    sudo vim /usr/local/hadoop/etc/hadoop/hdfs-site.xml

Then add the following properties between the <configuration> and </configuration> tags. The names dfs.name.dir and dfs.data.dir are the deprecated spellings of dfs.namenode.name.dir and dfs.datanode.data.dir; Hadoop 3.x still accepts them.

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.name.dir</name>
        <value>file:///hadoop/hdfs/namenode</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>file:///hadoop/hdfs/datanode</value>
      </property>
    </configuration>

See screenshot below.
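Several property names used in this guide (fs.default.name, dfs.name.dir, dfs.data.dir) are older spellings that Hadoop maps to current names. The sketch below lists which names in a config file fall into that group. Both function names are hypothetical, the mapping covers only these three keys, and the name extraction is a plain grep rather than a real XML parser.

```shell
# current_key NAME
# Hypothetical helper: print the current name for a deprecated key,
# or NAME unchanged if it is not one of the three keys handled here.
current_key() {
    case "$1" in
        fs.default.name) echo "fs.defaultFS" ;;
        dfs.name.dir)    echo "dfs.namenode.name.dir" ;;
        dfs.data.dir)    echo "dfs.datanode.data.dir" ;;
        *)               echo "$1" ;;
    esac
}

# report_deprecated_keys FILE
# Print "old -> new" for every deprecated <name> entry found in FILE.
report_deprecated_keys() {
    local file="$1" key new
    grep -o '<name>[^<]*</name>' "$file" |
        sed -e 's/<name>//' -e 's/<\/name>//' |
        while read -r key; do
            new="$(current_key "$key")"
            [ "$new" = "$key" ] || echo "$key -> $new"
        done
}
```

For example, report_deprecated_keys /usr/local/hadoop/etc/hadoop/hdfs-site.xml prints one line per name worth renaming and nothing if the file already uses current names.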

3. mapred-site.xml

This is where you set the MapReduce framework to use.

    sudo vim /usr/local/hadoop/etc/hadoop/mapred-site.xml

Set it as shown below.

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

4. yarn-site.xml

Settings in this file override the default configuration for Hadoop YARN. It defines resource management and job scheduling logic.

    sudo vim /usr/local/hadoop/etc/hadoop/yarn-site.xml

Add:

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>

Here is the screenshot of my configuration.

Step 6: Validate Hadoop Configurations

Initialize the Hadoop infrastructure store.

    sudo su - hadoop
    hdfs namenode -format

See output below:

Test the HDFS configuration.

    $ start-dfs.sh
    Starting namenodes on [localhost]
    Starting datanodes
    Starting secondary namenodes [hbase]
    hbase: Warning: Permanently added 'hbase' (ECDSA) to the list of known hosts.

Lastly, verify the YARN configuration:

    $ start-yarn.sh
    Starting resourcemanager
    Starting nodemanagers
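The start scripts return before the daemons have necessarily come up, so it helps to confirm that the Java processes are actually running. One way is jps, the process lister shipped with the JDK, which prints one "pid MainClass" pair per line. The sketch below reads that listing and names any expected daemon that is absent. The function name missing_daemons is hypothetical, and the daemon list assumes the single-node layout used in this guide.

```shell
# missing_daemons
# Hypothetical helper: read `jps` output on stdin and print the name of
# every expected Hadoop daemon that does not appear in it.
missing_daemons() {
    local listing daemon
    listing="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Match the class name as a whole field so DataNode does not match NameNode.
        printf '%s\n' "$listing" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
            echo "$daemon"
    done
}
```

Run it as the hadoop user with jps | missing_daemons; empty output means all five daemons were found.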

Hadoop 3.x default Web UI ports:

- NameNode – Default HTTP port is 9870.

- ResourceManager – Default HTTP port is 8088.

- MapReduce JobHistory Server – Default HTTP port is 19888.

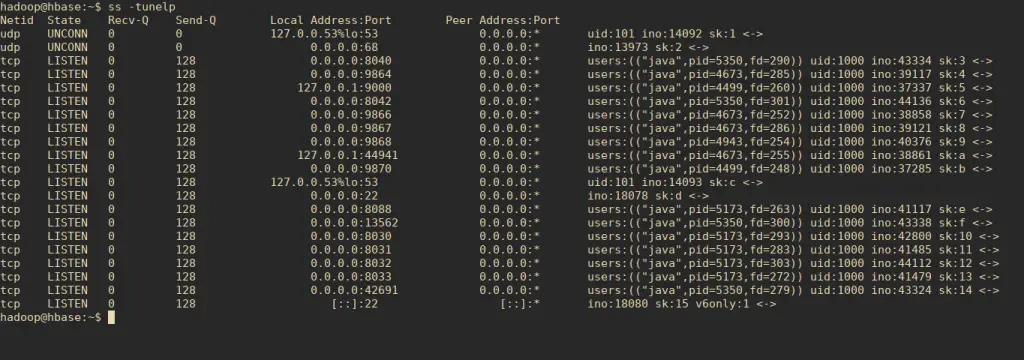

You can check the ports used by Hadoop using:

    $ ss -tunelp

Sample output is shown below.

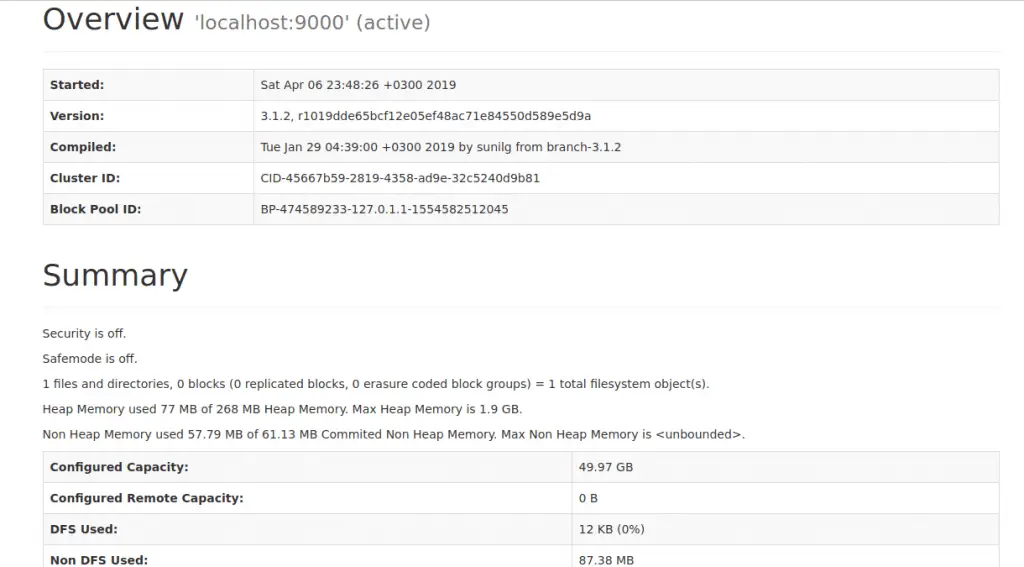

Access Hadoop Web Dashboard on http://ServerIP:9870.

Check Hadoop Cluster Overview at http://ServerIP:8088.

Test to see if you can create a directory.

    $ hadoop fs -mkdir /test
    $ hadoop fs -ls /
    Found 1 items
    drwxr-xr-x   - hadoop supergroup          0 2020-05-29 15:41 /test

Stopping Hadoop Services

Use the commands:

    $ stop-dfs.sh
    $ stop-yarn.sh

Install HBase on Ubuntu 20.04

You can choose to install HBase in Standalone Mode or Pseudo-Distributed Mode. The setup process is similar to our Hadoop installation.

Step 1: Download and Install HBase

Check the latest release or stable release version before you download. For production use, I recommend you go with the stable release.

    VER="2.2.4"
    wget http://apache.mirror.gtcomm.net/hbase/stable/hbase-$VER-bin.tar.gz

Extract the downloaded HBase archive.

    tar xvf hbase-$VER-bin.tar.gz
    sudo mv hbase-$VER/ /usr/local/HBase/

Update your $PATH values.

    cat <<EOF | sudo tee /etc/profile.d/hadoop_java.sh
    export JAVA_HOME=\$(dirname \$(dirname \$(readlink \$(readlink \$(which javac)))))
    export HADOOP_HOME=/usr/local/hadoop
    export HADOOP_HDFS_HOME=\$HADOOP_HOME
    export HADOOP_MAPRED_HOME=\$HADOOP_HOME
    export YARN_HOME=\$HADOOP_HOME
    export HADOOP_COMMON_HOME=\$HADOOP_HOME
    export HADOOP_COMMON_LIB_NATIVE_DIR=\$HADOOP_HOME/lib/native
    export HBASE_HOME=/usr/local/HBase
    export PATH=\$PATH:\$JAVA_HOME/bin:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin:\$HBASE_HOME/bin
    EOF

Update your shell environment values.

    $ source /etc/profile.d/hadoop_java.sh
    $ echo $HBASE_HOME
    /usr/local/HBase

Edit JAVA_HOME in the shell script hbase-env.sh:

    $ sudo vim /usr/local/HBase/conf/hbase-env.sh
    # Set JAVA_HOME - Line 27
    export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64/

Step 2: Configure HBase

We will configure HBase as we did Hadoop. All configuration files for HBase are located in the /usr/local/HBase/conf/ directory.

hbase-site.xml

Set the data directory to an appropriate location in this file.

Option 1: Install HBase in Standalone Mode (Not recommended)

In standalone mode, all daemons (HMaster, HRegionServer, and ZooKeeper) run in a single JVM process.

Create the HBase root directory.

    sudo mkdir -p /hadoop/HBase/HFiles
    sudo mkdir -p /hadoop/zookeeper
    sudo chown -R hadoop:hadoop /hadoop/

Open the file for editing.

    sudo vim /usr/local/HBase/conf/hbase-site.xml

Now add the following configuration between the <configuration> and </configuration> tags so that it looks like this:

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>file:/hadoop/HBase/HFiles</value>
      </property>
      <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/hadoop/zookeeper</value>
      </property>
    </configuration>

By default, unless you configure the hbase.rootdir property, your data is stored in /tmp/.

Now start HBase by using the start-hbase.sh script in the HBase bin directory.

    $ sudo su - hadoop
    $ start-hbase.sh
    running master, logging to /usr/local/HBase/logs/hbase-hadoop-master-hbase.out

Option 2: Install HBase in Pseudo-Distributed Mode (Recommended)

The file-based hbase.rootdir value set earlier runs HBase in Standalone Mode. Pseudo-distributed mode means that HBase still runs completely on a single host, but each HBase daemon (HMaster, HRegionServer, and ZooKeeper) runs as a separate process.

To install HBase in Pseudo-Distributed Mode, set the values as shown below. The hbase.rootdir URI must use the same NameNode address that core-site.xml sets as the default file system, which is hdfs://localhost:9000 in this guide.

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://localhost:9000/hbase</value>
      </property>
      <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/hadoop/zookeeper</value>
      </property>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
      </property>
    </configuration>

In this setup, your data is stored in HDFS instead.
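For HBase to write into HDFS, hbase.rootdir has to name the same NameNode host and port as the default file system in core-site.xml (hdfs://localhost:9000 in this guide). A mismatch is easy to miss by eye, so here is a minimal sketch that compares the two URIs. Both function names are hypothetical, and the values are passed in by hand rather than parsed from the XML files.

```shell
# uri_authority URI
# Hypothetical helper: print the host:port part of scheme://host:port/path.
uri_authority() {
    printf '%s\n' "$1" | sed -E 's#^[a-zA-Z][a-zA-Z0-9+.-]*://([^/]+).*#\1#'
}

# same_filesystem DEFAULT_FS_URI HBASE_ROOTDIR_URI
# Succeed only if both URIs point at the same host and port.
same_filesystem() {
    [ "$(uri_authority "$1")" = "$(uri_authority "$2")" ]
}
```

For example, same_filesystem hdfs://localhost:9000 hdfs://localhost:9000/hbase succeeds, while a rootdir on any other port fails the check.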

Ensure the ZooKeeper directory is created.

    sudo mkdir -p /hadoop/zookeeper
    sudo chown -R hadoop:hadoop /hadoop/

Now start HBase by using the start-hbase.sh script in the HBase bin directory.

    $ sudo su - hadoop
    $ start-hbase.sh
    localhost: running zookeeper, logging to /usr/local/HBase/bin/../logs/hbase-hadoop-zookeeper-hbase.out
    running master, logging to /usr/local/HBase/logs/hbase-hadoop-master-hbase.out
    : running regionserver, logging to /usr/local/HBase/logs/hbase-hadoop-regionserver-hbase.out

Check the HBase directory in HDFS:

    $ hadoop fs -ls /hbase
    Found 9 items
    drwxr-xr-x   - hadoop supergroup          0 2019-04-07 09:19 /hbase/.tmp
    drwxr-xr-x   - hadoop supergroup          0 2019-04-07 09:19 /hbase/MasterProcWALs
    drwxr-xr-x   - hadoop supergroup          0 2019-04-07 09:19 /hbase/WALs
    drwxr-xr-x   - hadoop supergroup          0 2019-04-07 09:17 /hbase/corrupt
    drwxr-xr-x   - hadoop supergroup          0 2019-04-07 09:16 /hbase/data
    drwxr-xr-x   - hadoop supergroup          0 2019-04-07 09:16 /hbase/hbase
    -rw-r--r--   1 hadoop supergroup         42 2019-04-07 09:16 /hbase/hbase.id
    -rw-r--r--   1 hadoop supergroup          7 2019-04-07 09:16 /hbase/hbase.version
    drwxr-xr-x   - hadoop supergroup          0 2019-04-07 09:17 /hbase/oldWALs

Step 3: Managing HMaster & HRegionServer

The HMaster server controls the HBase cluster. You can start up to 9 backup HMaster servers, which makes 10 total HMasters, counting the primary.

The HRegionServer manages the data in its StoreFiles as directed by the HMaster. Generally, one HRegionServer runs per node in the cluster. Running multiple HRegionServers on the same system can be useful for testing in pseudo-distributed mode.

Master and Region Servers can be started and stopped using the scripts local-master-backup.sh and local-regionservers.sh respectively. Each argument is a port offset that identifies one server.

    $ local-master-backup.sh start 2    # Start a backup HMaster at offset 2
    $ local-regionservers.sh start 3    # Start an additional RegionServer at offset 3

- Each HMaster uses two ports (16000 and 16010 by default). The port offset is added to these ports, so with an offset of 2, the backup HMaster uses ports 16002 and 16012.

The following command starts 3 backup servers using ports 16002/16012, 16003/16013, and 16005/16015.

    $ local-master-backup.sh start 2 3 5

- Each RegionServer requires two ports; the defaults are 16020 and 16030.

The following command starts four additional RegionServers, running on sequential ports starting at 16022/16032 (base ports 16020/16030 plus 2).

    $ local-regionservers.sh start 2 3 4 5
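The offset arithmetic described above can be sketched as two small functions, which is handy for checking that a planned offset does not collide with a port already in use. The function names are hypothetical; the base ports are the defaults quoted in this section.

```shell
# hmaster_ports OFFSET / regionserver_ports OFFSET
# Hypothetical helpers: print the two ports a server started with OFFSET
# would use, given the default base ports.
hmaster_ports()      { echo "$((16000 + $1))/$((16010 + $1))"; }
regionserver_ports() { echo "$((16020 + $1))/$((16030 + $1))"; }

# Example: the three backup HMasters started with "start 2 3 5".
for offset in 2 3 5; do
    echo "backup HMaster at offset $offset: $(hmaster_ports "$offset")"
done
```

The same call with regionserver_ports gives the port pairs for the additional RegionServers.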

To stop, replace the start parameter with stop in each command, followed by the offset of the server to stop. Example:

    $ local-regionservers.sh stop 5

Starting HBase Shell

Hadoop and HBase should be running before you can use the HBase shell. Here is the correct order for starting the services.

    $ start-all.sh
    $ start-hbase.sh

Then use the HBase shell.

    hadoop@hbase:~$ hbase shell
    2019-04-07 10:44:43,821 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/local/HBase/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    HBase Shell
    Use "help" to get list of supported commands.
    Use "exit" to quit this interactive shell.
    Version 1.4.9, rd625b212e46d01cb17db9ac2e9e927fdb201afa1, Wed Dec 5 11:54:10 PST 2018
    hbase(main):001:0>
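Once the prompt appears, a quick way to confirm that the Master and a RegionServer are really serving requests is to create a throwaway table, write one cell, read it back, and drop the table. The sketch below only prints those HBase shell commands so they can be piped into the shell. The function name smoke_test_commands, the table name smoke_test, and the column family cf are all hypothetical, and piping into hbase shell -n assumes your HBase version supports the non-interactive flag.

```shell
# smoke_test_commands [TABLE]
# Hypothetical helper: print HBase shell commands that create a table,
# write and read one cell, then remove the table again.
smoke_test_commands() {
    local table="${1:-smoke_test}"
    cat <<EOF
create '${table}', 'cf'
put '${table}', 'row1', 'cf:a', 'value1'
scan '${table}'
disable '${table}'
drop '${table}'
EOF
}
```

On the HBase host, run it as smoke_test_commands | hbase shell -n, or paste the printed lines at the interactive prompt.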

Stop HBase with:

    stop-hbase.sh

You have successfully installed Hadoop and HBase on Ubuntu 20.04.

Books to Read:

- Hadoop: The Definitive Guide: Storage and Analysis at Internet Scale

- Hadoop Explained

- Hadoop Application Architectures

- HBase: The Definitive Guide: Random Access to Your Planet-Size Data

- Big Data: Principles and best practices of scalable realtime data systems

- Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems

Reference:

- Apache Hadoop Documentation

- Apache HBase book
